Ultimate Stable Video Diffusion Review: Is SVD the Future of AI Video?

AI video generation is moving fast, and Stable Video Diffusion is quickly becoming one of the most talked-about names in the space. Created by Stability AI, SVD brings the familiar magic of Stable Diffusion images into motion, letting users turn still visuals, including images generated from text prompts, into short, dynamic video clips.

This Stable Video Diffusion review is designed for creators, marketers, developers, and anyone curious about where AI video is heading next. We will explore what SVD is, how it works, and why it matters right now. Whether you are experimenting with AI visuals for fun or considering real production use, this guide will help you understand what Stable Video Diffusion can realistically deliver today.

What Is Stable Video Diffusion?

Stable Video Diffusion, often called SVD, is an AI video generation model developed by Stability AI. It builds directly on the technology behind Stable Diffusion, which is widely known for high-quality AI image generation. Instead of creating a single image, SVD generates a sequence of frames that form a short video.

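The released SVD checkpoints are image-to-video models: you supply a still image, and SVD animates it while preserving its original style and structure. Text-to-video is possible in practice by first generating that still with a text-to-image model such as Stable Diffusion and then passing it to SVD. Stability AI also announced a text-to-video web interface alongside the release.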

At its core, Stable Video Diffusion focuses on smooth motion, visual consistency, and creative flexibility, making it a powerful foundation for future AI video tools.

Key Features of the SVD Video Model

Stable Video Diffusion is not trying to do everything at once. Instead, it focuses on a small set of features and does them well. The result is an AI video model that feels practical, flexible, and surprisingly approachable, even if you are new to AI video tools.

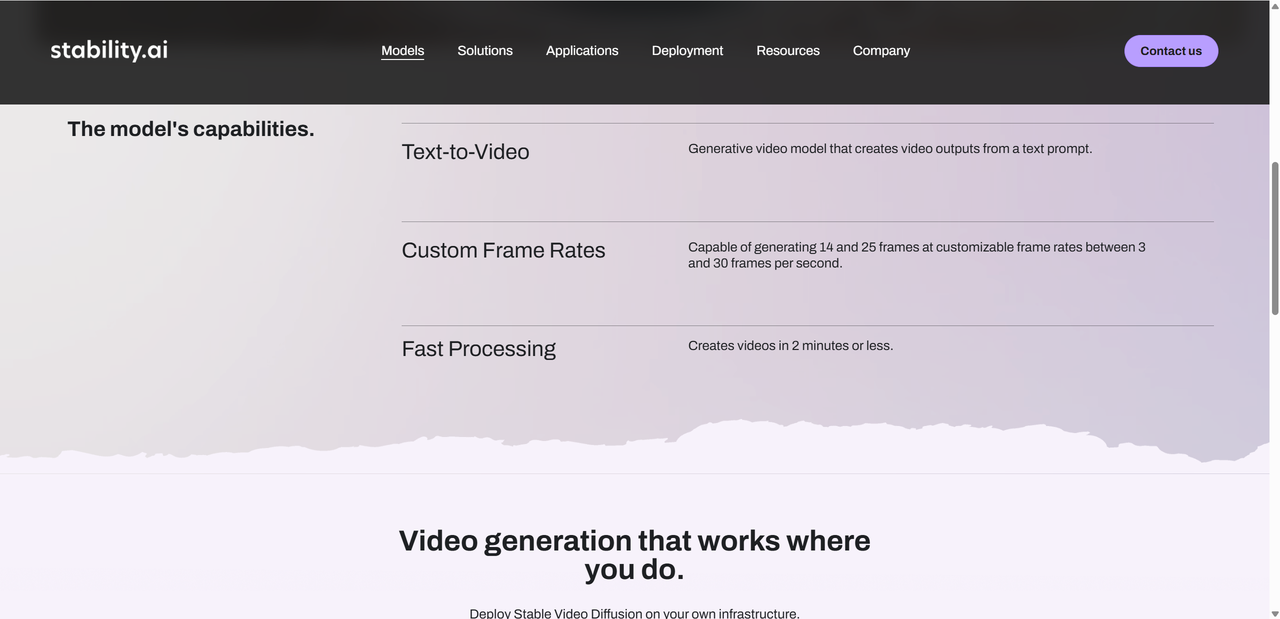

Text-to-Video & Image-to-Video Generation

SVD is used along two creation paths: image-to-video and, indirectly, text-to-video.

With image input, SVD animates a still image while keeping the original composition and style intact. For text-to-video, you describe a scene, subject, or mood, a text-to-image model renders it as a still, and SVD then brings that image to life through motion. The released checkpoints do not take text prompts directly.

Common use cases include:

Short creative clips for social media

Animating concept art or illustrations

Visual experiments and mood pieces

SVD works best with concise prompts and simple scenes, where motion adds atmosphere rather than complexity.

Frame Rates & Output Specs

Stable Video Diffusion comes in two main versions: SVD and SVD-XT. The XT version focuses on smoother motion by generating more frames.

| Feature | SVD | SVD-XT |
|---|---|---|
| Frames per clip | 14 | 25 |
| Frame rate | 3–30 fps | 3–30 fps |
| Typical duration | 2–5 s | 2–5 s |
| Best for | Short creative clips | Smoother motion |
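
Clip length follows directly from frame count and playback rate: duration equals frames divided by frames per second. The sketch below works through that arithmetic, assuming 14 frames for SVD and 25 for SVD-XT. The name `clip_duration` is made up for illustration and is not part of any SVD tooling.

```python
def clip_duration(num_frames: int, fps: float) -> float:
    """Return clip length in seconds for a frame count and playback rate."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return num_frames / fps


# Assumed frame counts: 14 for SVD, 25 for SVD-XT.
FRAME_COUNTS = {"SVD": 14, "SVD-XT": 25}

if __name__ == "__main__":
    for model, frames in FRAME_COUNTS.items():
        for fps in (3, 7, 30):
            seconds = clip_duration(frames, fps)
            print(f"{model}: {frames} frames at {fps} fps = {seconds:.2f} s")
```

At 7 fps, 14 frames last 2 seconds and 25 frames last about 3.6 seconds. At 30 fps the same frames play back in under a second, so the top of the frame-rate range trades duration for smoothness.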

Speed & Accessibility

One of SVD’s biggest strengths is speed. Most videos generate in about two minutes or less, depending on hardware.

Access options include:

Self-hosting for advanced users

API integrations

Hugging Face demos

Web-based previews from Stability AI
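
For the self-hosting route, one common option is the Hugging Face `diffusers` library. This review did not test that library specifically, so treat the following as a rough sketch under that assumption rather than a recommended setup. The helper names `generation_settings` and `animate_image` are hypothetical. The pipeline class, repository names, and call parameters are those documented by `diffusers`.

```python
# Hugging Face repository names for the two released image-to-video checkpoints.
MODEL_IDS = {
    "svd": "stabilityai/stable-video-diffusion-img2vid",
    "svd-xt": "stabilityai/stable-video-diffusion-img2vid-xt",
}
# Frame counts each checkpoint is designed to produce.
NUM_FRAMES = {"svd": 14, "svd-xt": 25}


def generation_settings(variant: str = "svd-xt", seed: int = 42) -> dict:
    """Collect model id, frame count, and seed for one run (illustrative helper)."""
    if variant not in MODEL_IDS:
        raise ValueError(f"unknown variant: {variant!r}")
    return {
        "model_id": MODEL_IDS[variant],
        "num_frames": NUM_FRAMES[variant],
        "seed": seed,
    }


def animate_image(image_path: str, output_path: str,
                  variant: str = "svd-xt", fps: int = 7) -> str:
    """Animate one still image with SVD and write the result as a video file."""
    # Heavy dependencies are imported lazily so the helper above
    # can be used on machines without a GPU stack installed.
    import torch
    from diffusers import StableVideoDiffusionPipeline
    from diffusers.utils import export_to_video, load_image

    settings = generation_settings(variant)
    pipe = StableVideoDiffusionPipeline.from_pretrained(
        settings["model_id"], torch_dtype=torch.float16, variant="fp16"
    )
    pipe.enable_model_cpu_offload()  # trades speed for lower GPU memory use

    # Resize to the 1024x576 frame the model expects (stretches non-16:9 images).
    image = load_image(image_path).resize((1024, 576))
    generator = torch.manual_seed(settings["seed"])
    frames = pipe(
        image,
        num_frames=settings["num_frames"],
        decode_chunk_size=8,  # decode frames in chunks to limit memory use
        generator=generator,
    ).frames[0]
    export_to_video(frames, output_path, fps=fps)
    return output_path
```

Calling `animate_image("input.png", "clip.mp4")` downloads the weights on first use and needs a CUDA GPU with `torch`, `diffusers`, and `accelerate` installed. The file names are placeholders.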

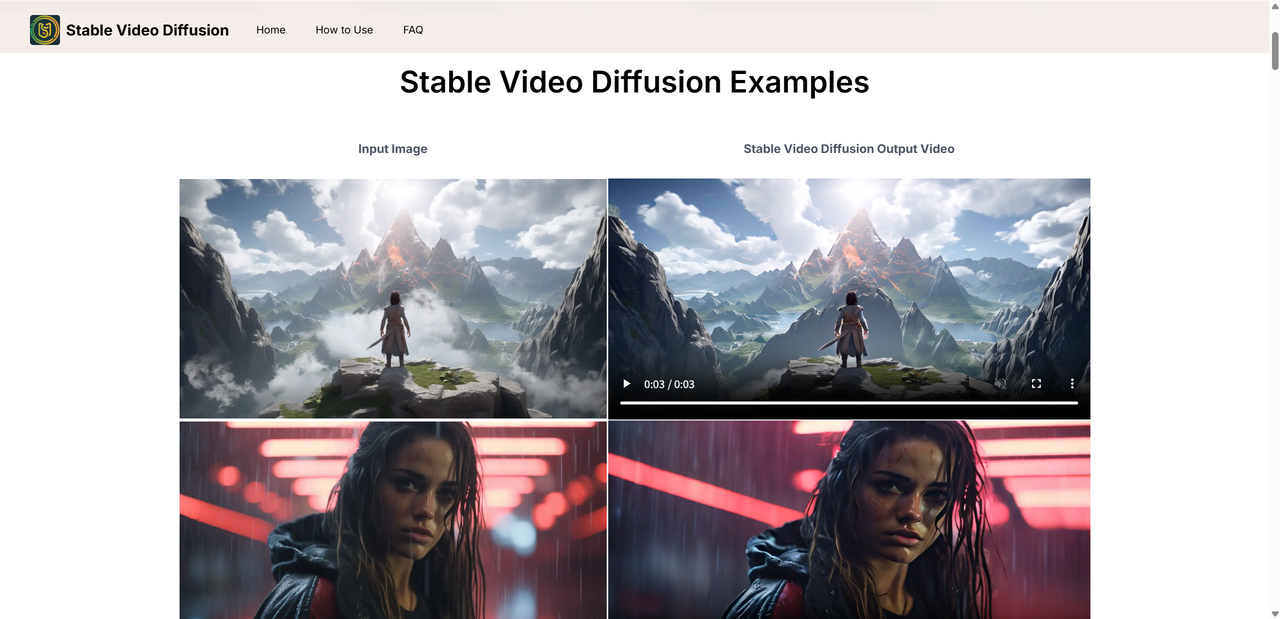

Stable Video Diffusion Test & Examples

To get a real feel for Stable Video Diffusion, hands-on testing is essential. In practice, SVD delivers visually pleasing short clips with a strong sense of motion, especially when the input is simple and well defined. The model excels at adding life to static scenes rather than telling complex visual stories.

Motion tends to feel textured and organic, particularly in natural environments. Water ripples, drifting clouds, and subtle camera movement look smooth, especially when using higher frame counts. That said, results vary a lot depending on prompt clarity and image quality. Clean inputs usually lead to cleaner motion.

SVD examples you will commonly see include:

Nature scenes with gentle motion like forests, oceans, and skies

Abstract or artistic transformations with surreal movement

Stylized characters and illustrations with limited motion

Highlights from SVD video model tests:

Smooth motion improves noticeably with higher frame counts

Looping quirks can appear near the start and end of clips

Artifacts show up more often with faces, hands, and text

Best results come from simple scenes with clear depth
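
One concrete way to prepare a clean input is to match the model's 16:9 frame before animating, so that a later resize does not stretch the picture. The sketch below computes a centered crop for that purpose, assuming the 1024x576 resolution the released checkpoints were trained at. `center_crop_box` is a hypothetical helper, not part of any SVD tooling.

```python
def center_crop_box(width: int, height: int,
                    target_w: int = 1024, target_h: int = 576) -> tuple:
    """Return (left, top, right, bottom) of the largest centered crop
    that has the target aspect ratio."""
    if min(width, height, target_w, target_h) <= 0:
        raise ValueError("all dimensions must be positive")
    # Compare aspect ratios by cross-multiplying to avoid float rounding.
    if width * target_h > height * target_w:
        # Source is wider than the target: keep full height, trim the sides.
        crop_w = height * target_w // target_h
        left = (width - crop_w) // 2
        return (left, 0, left + crop_w, height)
    # Source is as tall as or taller than the target: keep full width,
    # trim the top and bottom.
    crop_h = width * target_h // target_w
    top = (height - crop_h) // 2
    return (0, top, width, top + crop_h)


if __name__ == "__main__":
    for size in [(1920, 1080), (1000, 1000), (3000, 1000)]:
        print(size, "->", center_crop_box(*size))
```

The returned box can be passed to Pillow's `Image.crop`, followed by `resize((1024, 576))`, before the image goes to the model.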

Overall, SVD feels creative and expressive, but not yet precise.

Pros & Cons: Honest Stable Video Diffusion Review

Like any emerging AI tool, Stable Video Diffusion comes with clear strengths and noticeable limits. Here is a balanced look at what works well and where things still fall short.

✔ Pros (What Works Well)

Fast generation with a low barrier for creators

Excellent motion for landscapes, textures, and abstract visuals

Strong visual consistency across frames

Openly available code and weights, with growing community tools

Flexible access through self-hosting, demos, and APIs

✘ Cons (Limitations Noticed)

Very short video duration, usually just a few seconds

Inconsistent results with human faces and fine details

Text rendering is unreliable and often distorted

Prompt control feels basic compared to image models

Local use requires solid GPU power

Stable Video Diffusion shines as a creative playground, but it is not yet a full production solution.

Stable Video Diffusion vs Alternatives

When you step back and look at the big picture of AI video generation, Stable Video Diffusion (SVD) sits in an interesting spot compared with other popular tools like PikaLabs and Runway. It’s less polished than some commercial offerings, but it brings an open-source ethos and a flexible foundation that’s hard to ignore.

How They Stack Up

| Model | Quality | Open Source | Best Use |
|---|---|---|---|
| Stable Video Diffusion | Medium-High | ✔ | Creative exploration, research, tinkering |
| PikaLabs | High | ✘ | Commercial text-to-video with extra control |
| Runway | High | ✘ | Editing workflows & professional content |

Open Source vs Proprietary

One of the clearest differences is source availability. Stability AI has published SVD’s code and model weights, meaning anyone can inspect, tweak and host the model themselves, within the terms of its license. That’s a huge advantage if you like deep control or want to experiment with your own pipelines. In contrast, PikaLabs and Runway are closed commercial systems that trade open modification for smoother user experiences and dedicated support.

Quality & Flexibility

In user preference tests, SVD often holds its own against proprietary models, and many creators enjoy its creative output and frame stability, even though it’s still a research project. Runway and PikaLabs tend to produce higher-fidelity, more polished videos right out of the box, and often offer longer clips with more reliable motion. Runway also integrates video editing tools, while PikaLabs gives users more control over how scenes evolve.

Pricing & Accessibility

Because SVD is open and free, it’s extremely accessible — provided you have the tech knowledge or GPU power to run it. In contrast, Runway and PikaLabs operate on commercial pricing models or usage credits, which may be more expensive over time but make them simpler to use for non-technical creators.

Bottom line: If you love tinkering, want open access, and aren’t afraid of a little setup work, Stable Video Diffusion is a fascinating and cost-effective option. If you’re aiming for plug-and-play video production with polished results, the proprietary alternatives might be a better fit — at a price.

Who Is Stable Video Diffusion Best For?

Stable Video Diffusion is best suited for people who enjoy experimenting, learning, and creating without needing a fully polished production tool. Creators and artists can use SVD to animate illustrations, generate atmospheric clips, or explore visual ideas before committing to a final design. Educators and students benefit from its open nature, making it a strong choice for teaching how AI video models work and for running classroom demos.

Marketers and small studios may find SVD useful for early concept visuals, motion tests, or creative inspiration, especially when budgets are limited. It is also a natural fit for AI enthusiasts and researchers who want hands-on access to a modern video diffusion model.

Because Stable Video Diffusion is released under a research-focused license, it is mainly intended for non-commercial use. That makes it ideal for exploration and prototyping rather than direct commercial deployment.

Conclusion: Final Verdict on SVD

Stable Video Diffusion may not be a finished, all-purpose video solution yet, but it is an exciting glimpse into where AI video is heading. In this Stable Video Diffusion review, SVD stands out as a creative, research-driven tool that prioritizes openness, experimentation, and visual consistency over flashy polish. It already delivers impressive results for short clips, especially with landscapes, abstract motion, and artistic visuals.

At the same time, its limitations are clear. Videos are brief, prompt control is still basic, and people or text remain challenging. Still, for creators who enjoy exploring new tools, educators studying AI media, and developers building custom workflows, SVD is genuinely useful today.

If you are curious about AI video and want to see what is possible right now, Stable Video Diffusion is absolutely worth experimenting with. Also worth exploring is Lovart AI, a tool particularly adept at creating videos with consistent animated styles and coherent storytelling.
